We do optimization. That is what we do, and I like to think we are pretty darn good at it. Sometimes companies come to us, but instead of embracing the process of optimization, they expect that, since we are the experts, we have some magic bullet that will deliver BIG wins overnight on almost every test. Sure, we see the big wins, and we get them fairly often, but what most people don’t talk about is all the losing tests you run along the way.

Today I am going to shed some light on why failed split tests are important and how they can ultimately help you get more of those big wins.

Optimization is a learning process. It is more than making a list of things you want to test, throwing up a different button color and hoping you see some improvement. Optimization, like I said, is a process: learning how visitors interact with a site or landing page, finding which elements they respond to best, testing your way to a better user experience and, ultimately, increasing conversions as a result.

Along the way, you will get losing tests, but what you do with those losing tests is the biggest catalyst for improvement. Every test should either confirm or challenge your assumptions and make you smarter about your visitors, so you can better deliver your product or service.

A specific example comes from a recent test we ran on the mobile shopping experience for an e-commerce store.

Looking at the data, we found that many mobile shoppers were dropping off during the checkout process. Given that over 50% of the store’s orders came from mobile, this was an important area to focus on. After further research, we found that there were simply too many steps in the mobile experience. Visitors had to click to expand options such as size and color before they could make a selection.

In an effort to streamline the process, we decided to test showing the options already expanded, removing the extra “click” needed to make a selection. On mobile, we are used to scrolling, so a longer page wasn’t as much of an issue.

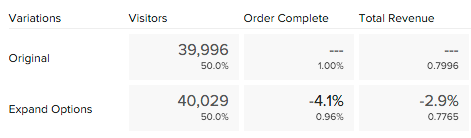

Early on, the test showed extreme promise, but as more time passed, the expanded option started to slip and ended up losing by a small margin.
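If you want to sanity-check an early lead like this yourself, one common approach is a two-proportion z-test on the conversion counts. The sketch below is only an illustration, not the method we used on this test: the function name `two_proportion_z_test` is made up for the example, and every number in it is hypothetical rather than data from the store. It shows how a lead that looks large on a few hundred visitors can still be weak evidence, which is one reason early results often fade.

```python
from math import sqrt, erf


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare two conversion rates with a pooled two-proportion z-test.

    conv_a / n_a: conversions and visitors for the control.
    conv_b / n_b: conversions and visitors for the variation.
    Returns (z, two_sided_p_value). A positive z means the variation
    converted at a higher rate than the control.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal CDF.
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return z, p_value


# Hypothetical early snapshot: small sample, variation looks far ahead.
early_z, early_p = two_proportion_z_test(20, 400, 32, 400)
print(f"early: z={early_z:.2f}, p={early_p:.3f}")

# Hypothetical later snapshot: large sample, variation slightly behind.
late_z, late_p = two_proportion_z_test(500, 10_000, 490, 10_000)
print(f"late:  z={late_z:.2f}, p={late_p:.3f}")
```

Keep in mind that checking a test repeatedly and stopping the moment it looks good inflates false positives, so treat a check like this as a guide for patience rather than a reason to call a winner early.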

Most people, when optimizing, would chalk that up to a failed experiment and move on to the next test. Instead, we learned something from it. Since the variation showed early promise and didn’t lose by much, we knew we were onto something. Visitors evidently liked something about the expanded option.

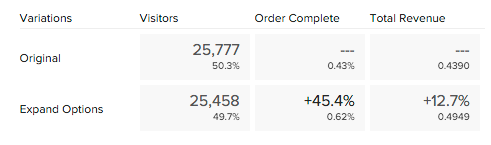

We then went back to the drawing board and created a new variation of the expanded mobile shopping experience, but this time we changed a bit of the styling and expanded only some of the key areas.

Basically, we took something that had promise and tried to make it better based on the data we had collected.

The result?

The new test variation increased new orders by more than 45% and revenue per visitor by 12.7%.

Not a bad little test result, all because we learned something, took the information from what could have been written off as a losing test and made it better.

So before you get discouraged by a few losing variations, remember that optimization is a process, and we often learn more from the losing tests than we do from the winners. Embrace the process, have some patience and learn from every test to come out a winner in the end.

Have something to add? Leave us a comment.
